Neural network layers

PyTorch makes it quick to build the individual layers of a neural network.

Fully connected layer

    import torch
    import torch.nn as nn

    x = torch.tensor([1., 2., 3.])
    fc = nn.Linear(in_features=3, out_features=5)  # maps 3 inputs to 5 outputs
    y = fc(x)
    y

    tensor([ 0.0927,  0.6689,  1.1373, -1.7523, -0.4160], grad_fn=<AddBackward0>)
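To make the output above less opaque, the following minimal sketch checks that a fully connected layer computes `x @ W.T + b`. The fixed seed and the variable names `manual` and `layer_out` are assumptions added for illustration; the values printed in the original output came from a different random initialization and will not be reproduced.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the random initial weights are repeatable

fc = nn.Linear(in_features=3, out_features=5)
x = torch.tensor([1., 2., 3.])

# fc.weight has shape (out_features, in_features); fc.bias has shape (out_features,)
manual = x @ fc.weight.T + fc.bias
layer_out = fc(x)

print(tuple(fc.weight.shape), tuple(fc.bias.shape))
print(torch.allclose(manual, layer_out, atol=1e-6))
```

Because the weights and bias are initialized randomly, the numbers change from run to run unless a seed is set.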

Convolutional layer

    # a single-channel 3x3 "image", shape (channels, height, width)
    img = torch.tensor([[
        [1., 2., 3.],
        [4., 5., 6.],
        [7., 8., 9.]
    ]])
    conv = nn.Conv2d(
        in_channels=1,
        out_channels=2,
        kernel_size=3,
        stride=1,
        padding=1
    )
    img_y = conv(img)
    img_y

    tensor([[[-1.0517, -0.2713,  0.7135],
             [-2.1632, -1.0055,  0.8507],
             [ 0.1104, -0.6632, -0.3824]],

            [[-0.1677, -0.8115, -0.1732],
             [-0.7791, -2.5789, -1.3892],
             [-0.2048, -1.3412, -2.8327]]], grad_fn=<SqueezeBackward1>)
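The output keeps the 3x3 spatial size because `padding=1` compensates for the 3x3 kernel. A small sketch of the usual output-size formula, `(size + 2 * padding - kernel_size) // stride + 1`, is shown below. The helper `conv_out_size` is a hypothetical name introduced here, it assumes a dilation of 1, and the input is given an explicit batch dimension, which differs from the unbatched tensor used above.

```python
import torch
import torch.nn as nn

def conv_out_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution (dilation assumed to be 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1

# same 3x3 image as above, reshaped to (batch, channels, height, width)
img = torch.arange(1., 10.).reshape(1, 1, 3, 3)
conv = nn.Conv2d(in_channels=1, out_channels=2, kernel_size=3, stride=1, padding=1)
out = conv(img)

print(tuple(out.shape))                                       # (1, 2, 3, 3)
print(conv_out_size(3, kernel_size=3, stride=1, padding=1))   # 3
```

The two output channels correspond to `out_channels=2`: each channel has its own kernel and bias.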

Pooling layer

    pool = nn.MaxPool2d(2)  # 2x2 window
    X = torch.tensor(
        [[[1., 2., 3., 4.],
          [5., 6., 7., 8.]]]
    )
    Y = pool(X)
    Y
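The pooling example has no output listed, so here is a short sketch of what it computes. With a 2x2 window (the stride defaults to the kernel size), the 2x4 input splits into two non-overlapping windows and the maximum of each is kept.

```python
import torch
import torch.nn as nn

pool = nn.MaxPool2d(2)  # 2x2 window; stride defaults to the kernel size
X = torch.tensor([[[1., 2., 3., 4.],
                   [5., 6., 7., 8.]]])  # shape (channels=1, height=2, width=4)
Y = pool(X)

# window [[1, 2], [5, 6]] -> 6, window [[3, 4], [7, 8]] -> 8
print(Y.tolist())      # [[[6.0, 8.0]]]
print(tuple(Y.shape))  # (1, 1, 2)
```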

These are a few of the most commonly used neural network layers.

Activation functions

An activation function is usually placed between the layers of a neural network to introduce non-linearity.

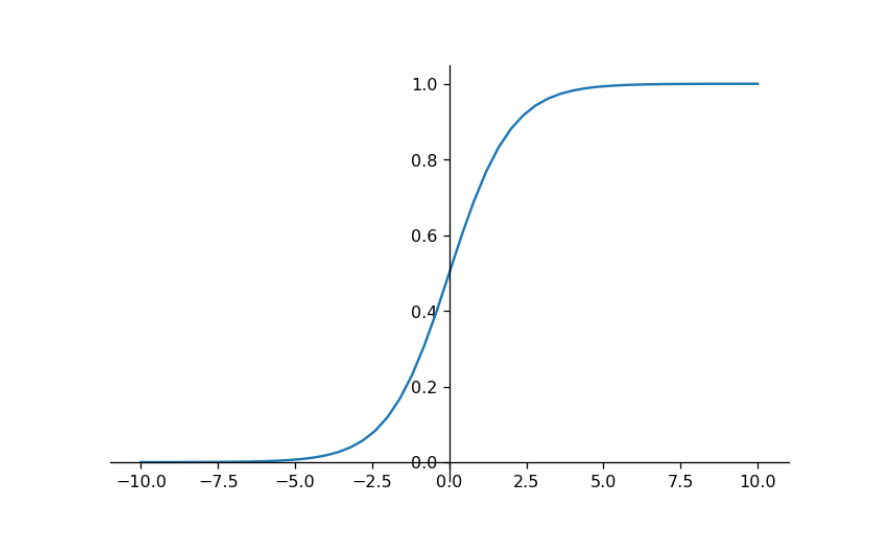
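Why the non-linearity matters can be illustrated with a small sketch: two linear layers stacked without an activation collapse into a single linear map. The layer sizes, the seed, and the names `stacked` and `merged` are assumptions chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

fc1 = nn.Linear(2, 3)
fc2 = nn.Linear(3, 1)
x = torch.randn(4, 2)

# Two linear layers with no activation in between ...
stacked = fc2(fc1(x))

# ... are equivalent to one linear layer with merged weight and bias.
W = fc2.weight @ fc1.weight            # shape (1, 2)
b = fc2.weight @ fc1.bias + fc2.bias   # shape (1,)
merged = x @ W.T + b

print(torch.allclose(stacked, merged, atol=1e-6))

# With an activation in between, this merging no longer applies in general.
with_relu = fc2(torch.relu(fc1(x)))
print((with_relu - merged).abs().max())
```

Without an activation, adding depth adds no expressive power, which is the reason an activation is inserted between layers.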

Sigmoid

\(y = \frac{1}{1+e^{-x}}\)

    import numpy as np

    act1 = nn.Sigmoid()
    x = torch.tensor(np.linspace(-10, 10, 51))
    y = act1(x)

    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    # hide the top and right spines, move the other two through the origin
    ax.spines['right'].set_color('none')
    ax.spines['top'].set_color('none')
    ax.xaxis.set_ticks_position('bottom')
    ax.yaxis.set_ticks_position('left')
    ax.spines['bottom'].set_position(('data', 0))
    ax.spines['left'].set_position(('data', 0))
    plt.plot(x, y)
    plt.show()

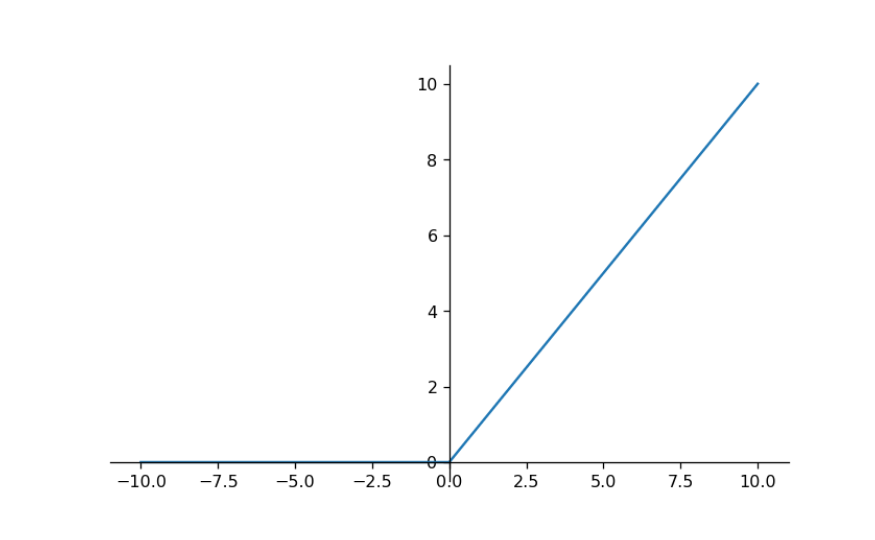
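As a quick numerical check of the curve, the sketch below compares `nn.Sigmoid` with the formula written out by hand. It uses `torch.linspace` in place of the NumPy array above, which is an assumption made to keep the example dependency-free.

```python
import torch
import torch.nn as nn

x = torch.linspace(-10, 10, 51)
y_layer = nn.Sigmoid()(x)
y_formula = 1 / (1 + torch.exp(-x))  # the formula, written out directly

print(torch.allclose(y_layer, y_formula, atol=1e-6))
print(y_layer[25].item())  # middle sample, where x is 0
```

The output stays strictly between 0 and 1 and passes through 0.5 at `x = 0`.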

ReLU

$$
y =
\begin{cases}
x, & x \ge 0 \\
0, & x < 0
\end{cases}
$$

    act2 = nn.ReLU()
    x = torch.tensor(np.linspace(-10, 10, 51))
    y = act2(x)

    # start a fresh figure: the previous one has already been shown
    fig, ax = plt.subplots()
    ax.spines['right'].set_color('none')
    ax.spines['top'].set_color('none')
    ax.xaxis.set_ticks_position('bottom')
    ax.yaxis.set_ticks_position('left')
    ax.spines['bottom'].set_position(('data', 0))
    ax.spines['left'].set_position(('data', 0))
    ax.plot(x, y)
    plt.show()
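The piecewise definition of ReLU amounts to `max(0, x)` applied element-wise. A minimal sketch, using a handful of sample values chosen for illustration, compares `nn.ReLU` with two direct ways of writing that definition:

```python
import torch
import torch.nn as nn

x = torch.tensor([-2., -0.5, 0., 0.5, 2.])
y = nn.ReLU()(x)

# the piecewise definition, written two ways
y_clamp = torch.clamp(x, min=0)
y_where = torch.where(x >= 0, x, torch.zeros_like(x))

print(y.tolist())
print(torch.equal(y, y_clamp), torch.equal(y, y_where))
```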

These are two commonly used activation functions.

Neural network class

With PyTorch, a complete neural network can be assembled quickly and cleanly by subclassing `nn.Module`.

    class Net(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc1 = nn.Linear(in_features=2, out_features=3)
            self.act1 = nn.ReLU()
            self.fc2 = nn.Linear(in_features=3, out_features=1)

        def forward(self, x):
            x = self.fc1(x)
            x = self.act1(x)
            x = self.fc2(x)
            return x

    features = torch.randn(3, 2)  # 3 samples with 2 features each
    features
    model = Net()
    model(features)

    tensor([[ 1.1749, -1.0738],
            [-0.3788, -1.6800],
            [-0.4832,  0.3045]])
    tensor([[0.4686],
            [0.5508],
            [0.1524]], grad_fn=<AddmmBackward0>)
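To see what the class actually holds, the sketch below redefines the same `Net` and lists its learnable parameters. The parameter count follows from the layer sizes: `fc1` has 2*3 weights plus 3 biases, and `fc2` has 3*1 weights plus 1 bias. The variable names `total` and `out` are introduced here for illustration.

```python
import torch
import torch.nn as nn

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(in_features=2, out_features=3)
        self.act1 = nn.ReLU()
        self.fc2 = nn.Linear(in_features=3, out_features=1)

    def forward(self, x):
        return self.fc2(self.act1(self.fc1(x)))

model = Net()

# layers assigned as attributes are registered automatically by nn.Module
for name, p in model.named_parameters():
    print(name, tuple(p.shape))

total = sum(p.numel() for p in model.parameters())
print(total)  # 6 + 3 + 3 + 1 = 13

out = model(torch.randn(3, 2))
print(tuple(out.shape))  # (3, 1): one output per input sample
```

Calling `model(features)` runs `forward`, so each of the 3 input rows is mapped to a single output value.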